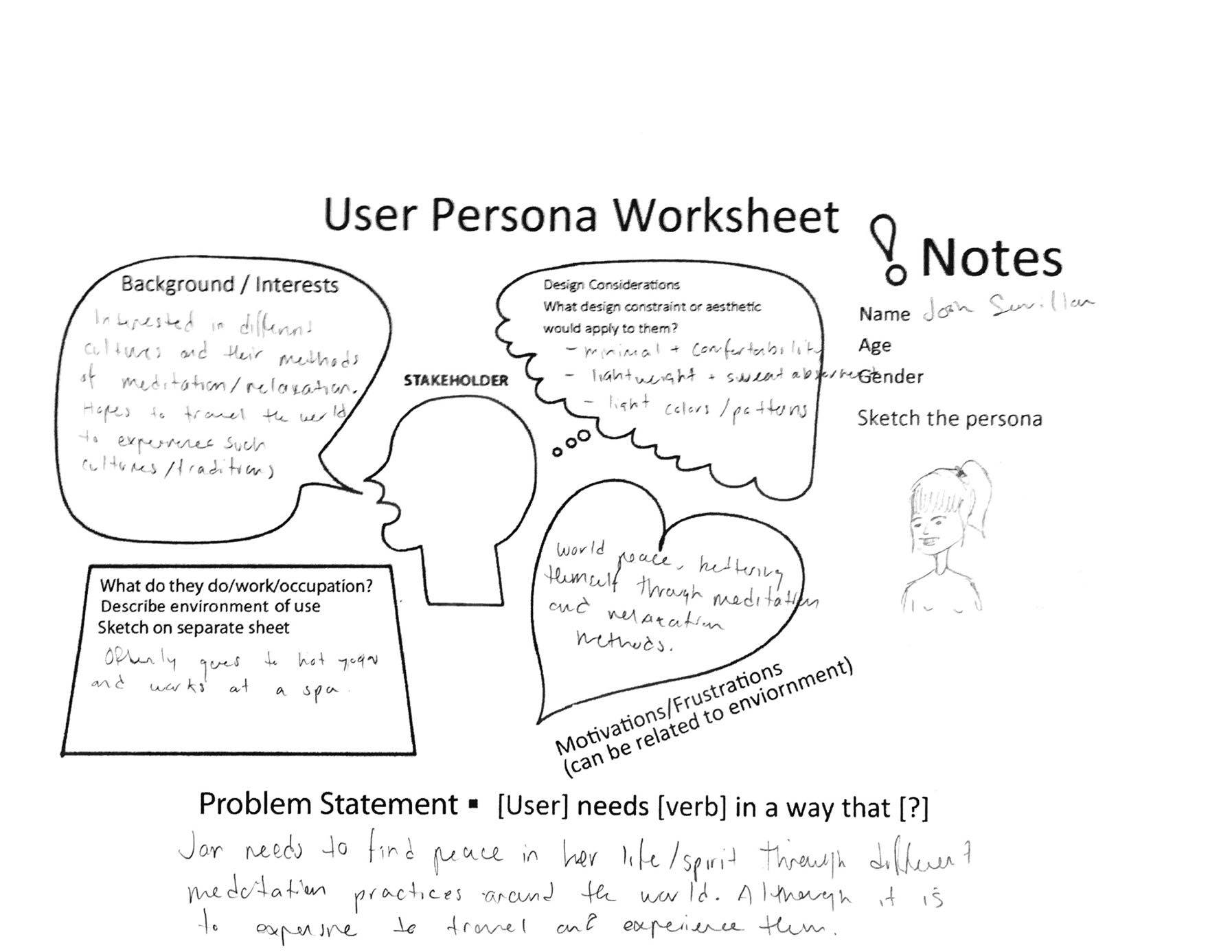

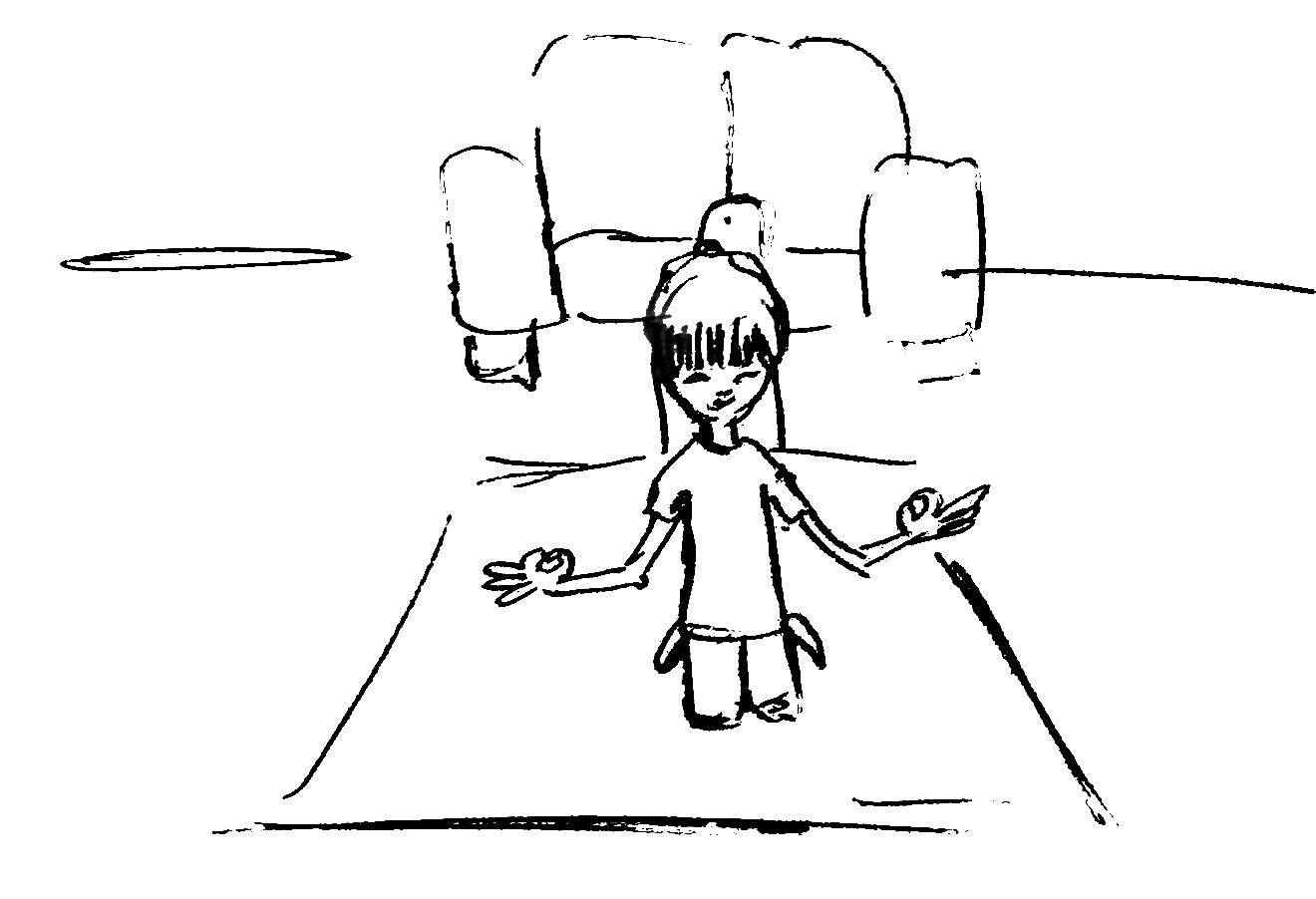

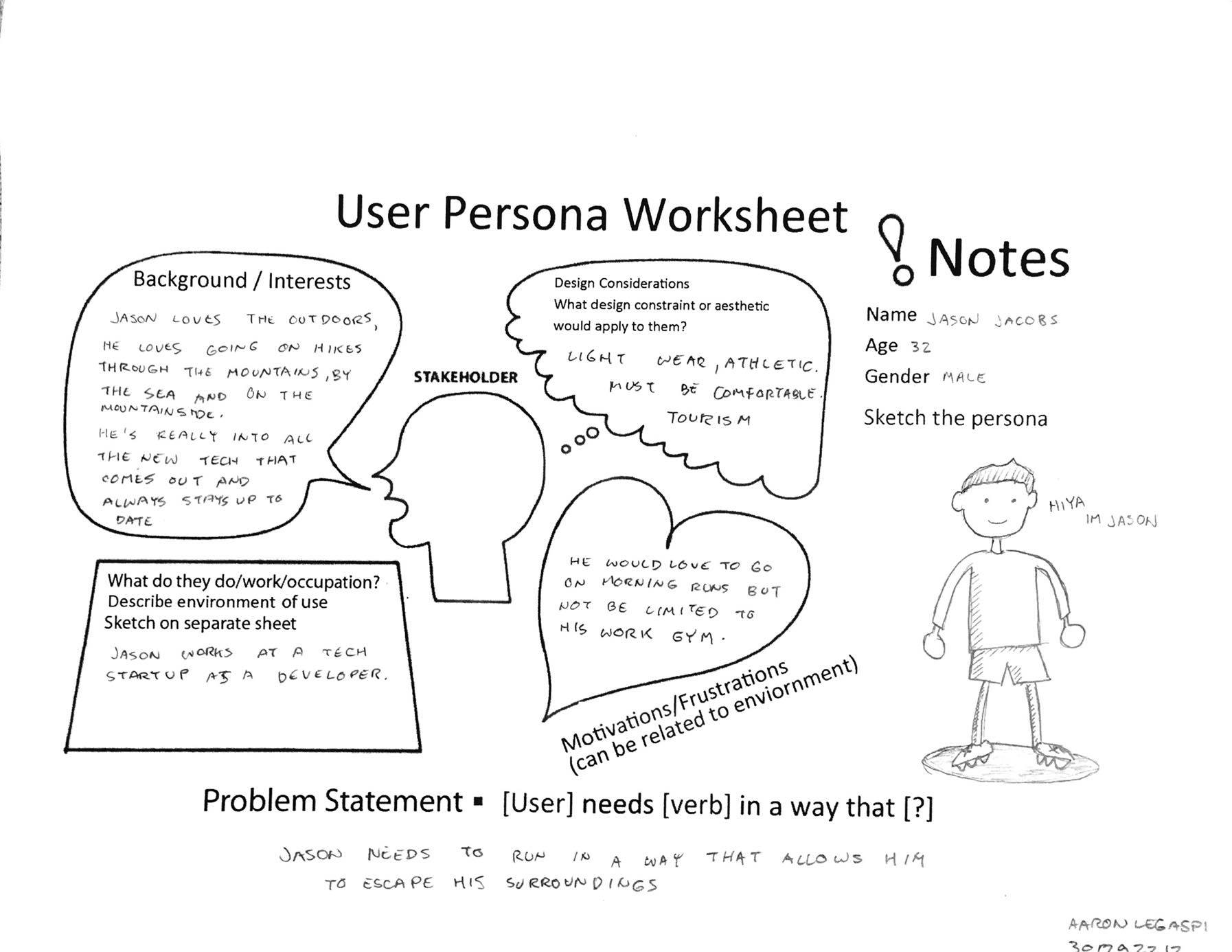

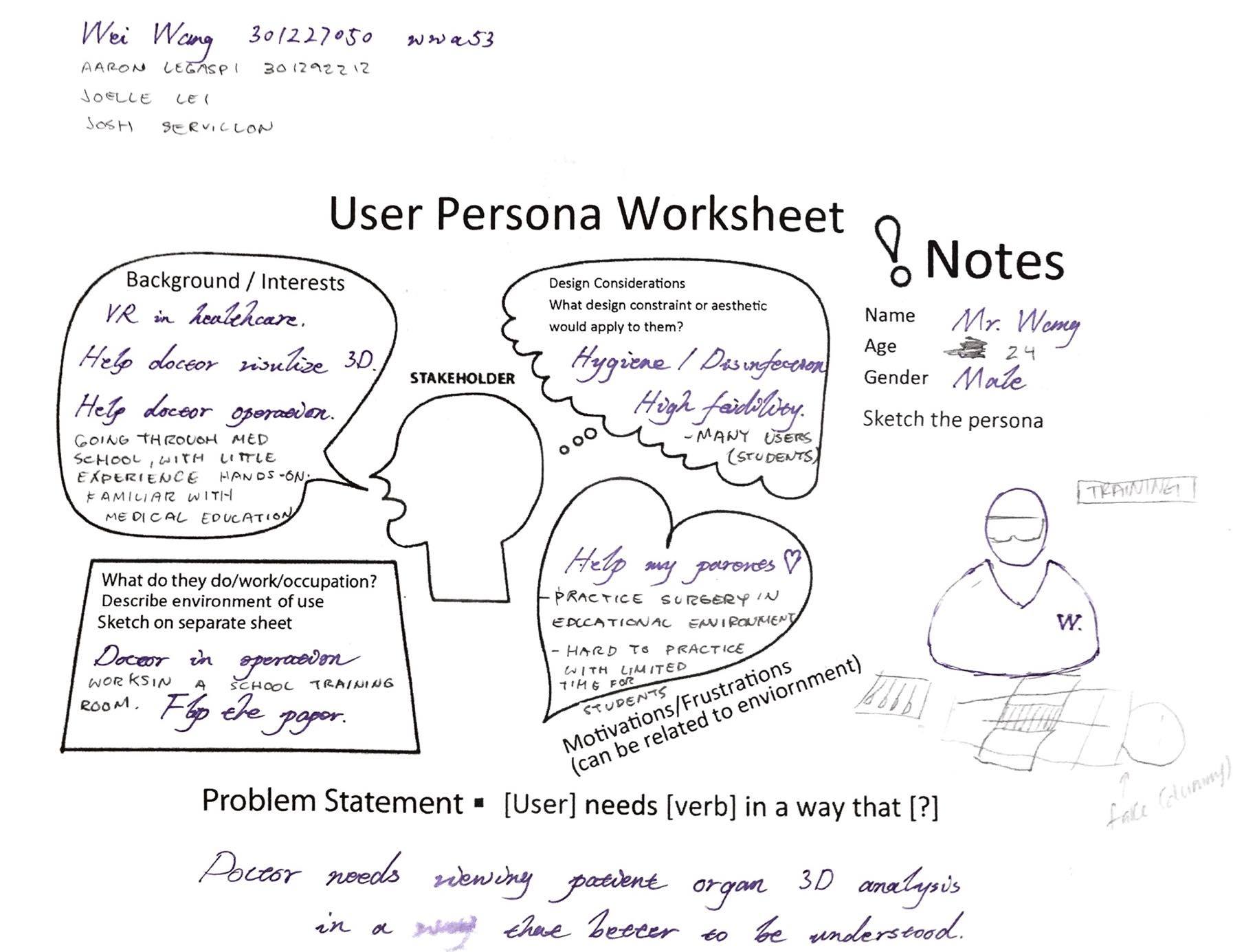

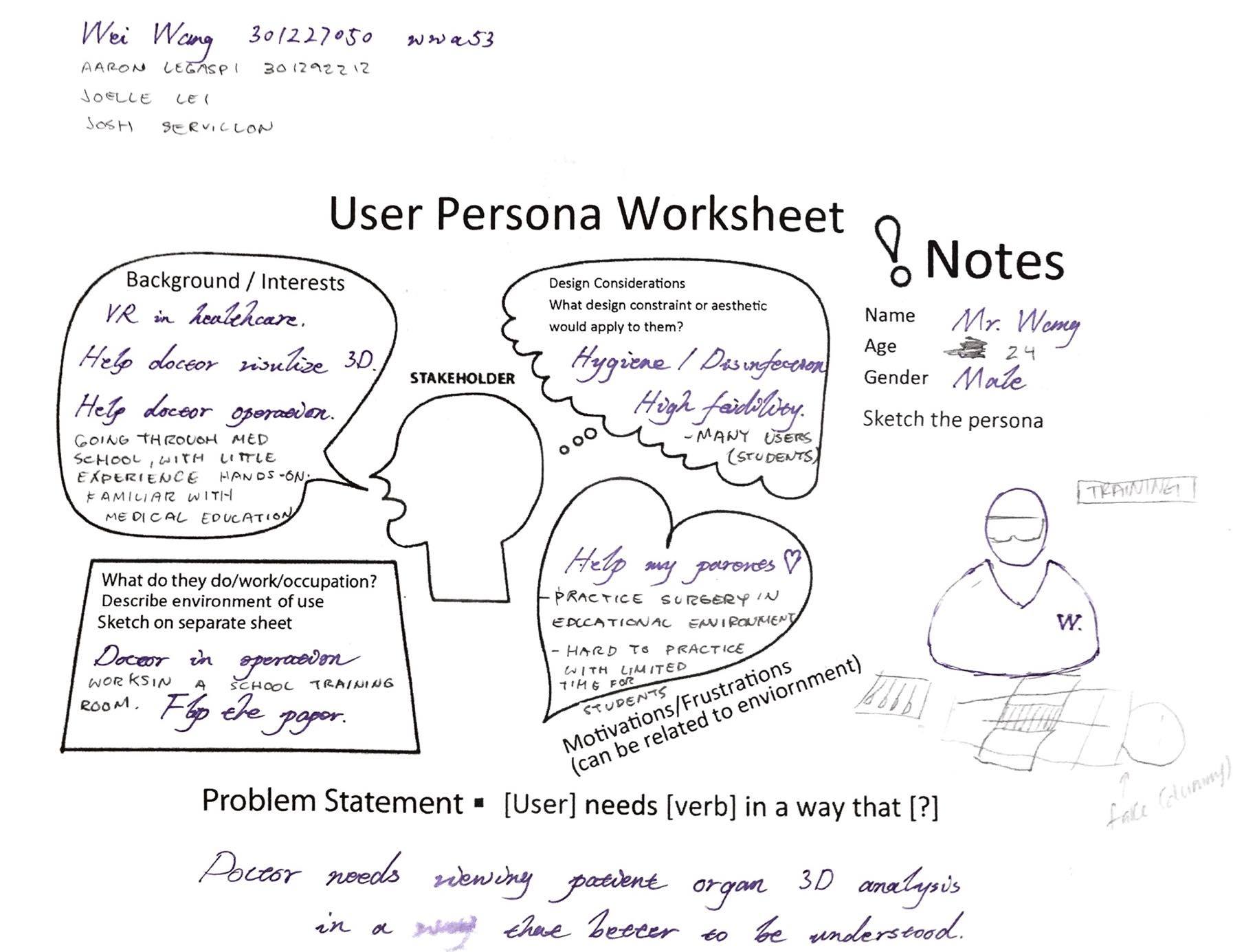

Persona Sketch

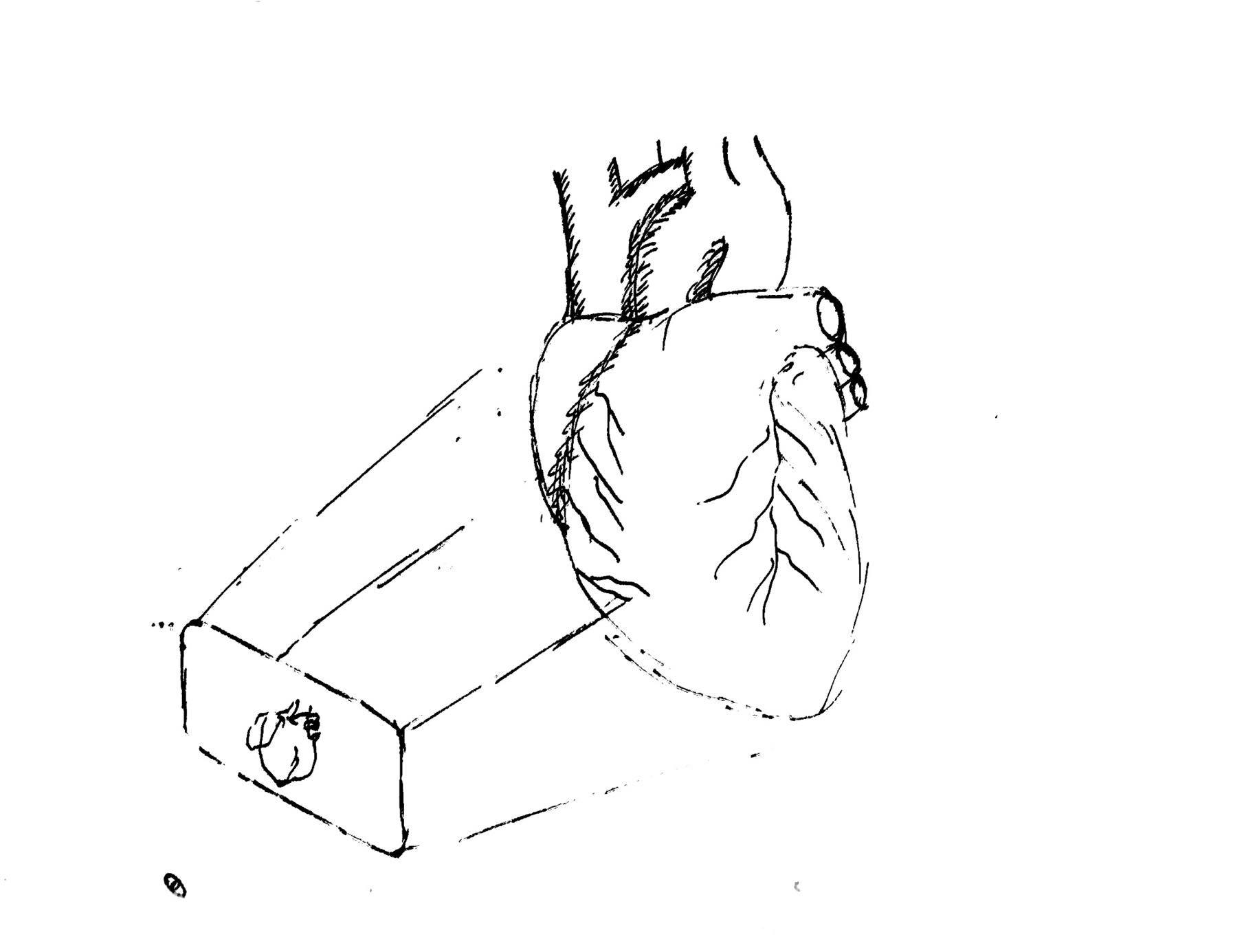

Finalized Idea: Medical Training

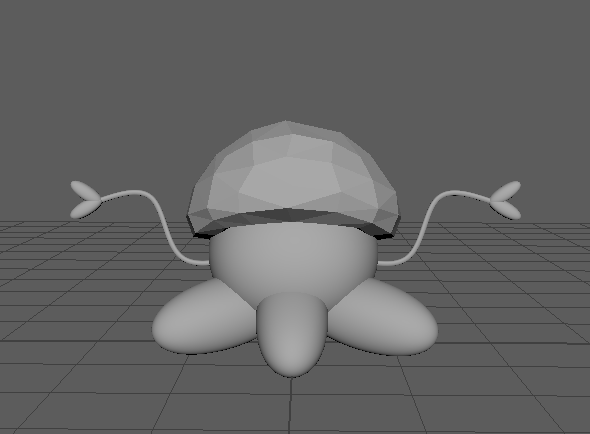

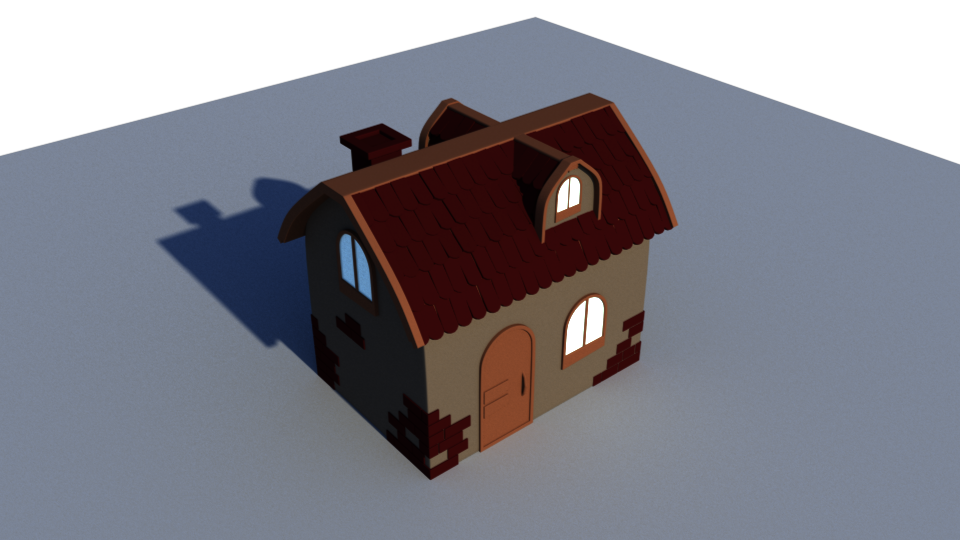

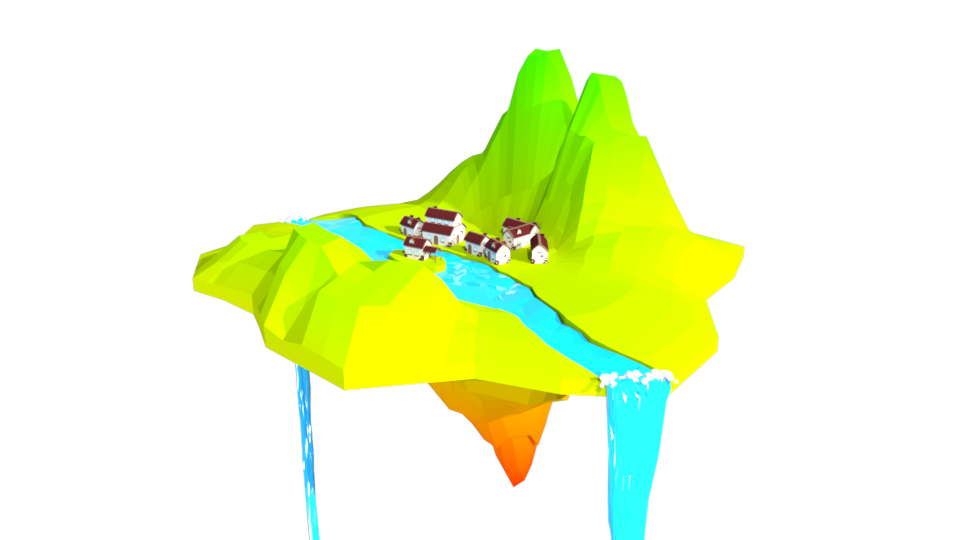

We divided the tasks among individuals: Mr. Tentacle, Mr. Hot, the alien ship, and the village environment. Concept modeling is done in a low-poly style with a bright toon shader.

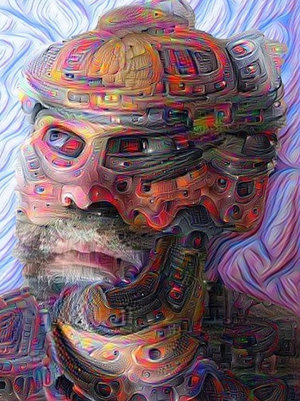

It didn’t take long to set up my dev environment. Once the remote connection was done (I need it because the lab runs on high-end CUDA machines), I gathered a few well-labeled programs into my own folder. I ran a quick test on a sample video: split it into frames, apply DeepDream to each frame, then reassemble the frames with the sound into a dreamed video. The process takes a few hours for ~1000 frames, and the result is fine.
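The split, dream, and reassemble round trip can be sketched with ffmpeg. The post doesn’t say which tool the lab’s programs use, so everything here is an assumption: the helper names, the `%05d.png` frame pattern, and the 30 fps default are hypothetical. The sketch only builds ffmpeg argument lists, which keeps it easy to inspect before running.

```python
import subprocess
from pathlib import Path

# Assumption: ffmpeg is installed and on PATH; names and fps are illustrative.
FRAME_PATTERN = "%05d.png"


def split_command(video, frame_dir):
    """ffmpeg command that writes every frame of `video` as a numbered PNG.

    The image2 muxer numbers files from 1 by default, matching the 1-based
    frame numbers used by the keyframe code. `frame_dir` must already exist.
    """
    return ["ffmpeg", "-i", str(video), str(Path(frame_dir) / FRAME_PATTERN)]


def assemble_command(frame_dir, original_video, output, fps=30):
    """ffmpeg command that encodes the dreamed PNGs and copies back the audio."""
    return [
        "ffmpeg",
        "-framerate", str(fps),                      # should match the source video
        "-i", str(Path(frame_dir) / FRAME_PATTERN),  # input 0: dreamed frames
        "-i", str(original_video),                   # input 1: original, for its audio
        "-map", "0:v", "-map", "1:a?",               # '?' tolerates a video with no audio
        "-c:v", "libx264", "-pix_fmt", "yuv420p",
        "-shortest",
        str(output),
    ]


def run(cmd):
    """Run a command, raising CalledProcessError if it fails."""
    subprocess.run(cmd, check=True)
```

For example: `run(split_command("sample.mp4", "frames"))`, dream every PNG into `dreamed/`, then `run(assemble_command("dreamed", "sample.mp4", "dreamed.mp4"))`.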

As mentioned in the previous post, iteration and octave are now controlled through a new parameter that points to a CSV file, formatted as follows:

```csv
frame,iteration,octave,layer
33,15,5,Layer
66,5,30,Layer
99,30,10,Layer
```

These values can then be interpolated linearly, or with other models, to generate a per-frame list of tuples holding the corresponding iteration, octave, and layer (the other models and layer support are not implemented yet). Each pair of consecutive rows is processed together and marks the start and end of one keyframe section. One trick here: if the first keyframe does not start at frame 1, we assume that every frame between frame 1 and that keyframe has the same iteration, octave, and layer. The same applies between the last keyframe and the last frame. The code sample is as follows:

```python
import csv

# Must add one extra slot since frames start from 1 rather than 0
keyFrames = [(iterations, octaves)] * (int(totalFrame) + 1)

if key_frames is not None:
    with key_frames as csvfile:
        # Get the first line to decide whether the first frame is defined
        reader = csv.reader(csvfile, delimiter=',', quotechar='\'')
        next(reader, None)  # Skip the header
        firstLine = next(reader, None)

        if firstLine[0] != '1':
            # Hold the first keyframe's values constant back to frame 1
            previousRow = firstLine[:]
            previousRow[0] = '1'
        else:
            previousRow = ''

        # Rewind the reader and read line by line
        csvfile.seek(0)
        next(reader, None)  # Skip the header
        for row in reader:
            if previousRow != '':
                interpolate(keyFrames, previousRow, row, 'Linear')
            previousRow = row

        # Check the last line and end the interpolation properly
        if row[0] != str(totalFrame):
            lastRow = row[:]
            lastRow[0] = str(totalFrame)
            interpolate(keyFrames, row, lastRow, 'Linear')
```

After these sections are prepared, the interpolation function is called. Currently only the simple linear model is implemented; more advanced ones can be introduced in the future. One thing to keep in mind is that frames always start from 1 rather than 0. The code sample is as follows:

```python
def interpolate(keyFrames, previousRow, currentRow, method):
    # Only the linear model is implemented for now, so `method` is not used yet
    frameSpan = float(currentRow[0]) - float(previousRow[0])
    iterationFragment = (float(currentRow[1]) - float(previousRow[1])) / frameSpan
    octaveFragment = (float(currentRow[2]) - float(previousRow[2])) / frameSpan

    # Frames start from 1, so keyFrames is indexed by the actual frame number
    for i in range(0, int(currentRow[0]) - int(previousRow[0]) + 1):
        # Round rather than truncate, so floating-point error such as
        # 4.999999 does not drop a value by one
        iteration = str(round(float(previousRow[1]) + i * iterationFragment))
        octave = str(round(float(previousRow[2]) + i * octaveFragment))
        keyFrames[int(previousRow[0]) + i] = (iteration, octave)
```
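The two snippets above depend on variables defined elsewhere in the program (`totalFrame`, `iterations`, `octaves`, `key_frames`). Here is a standalone sketch of the same idea that can be run directly on the sample CSV. It pads the first and last keyframes in the same way, interpolates each pair of consecutive rows, and stores rounded integers. The function names and the `(10, 4)` default are hypothetical, and layer is still ignored.

```python
import csv
import io


def interpolate(key_frames, previous_row, current_row):
    """Linearly fill key_frames from previous_row's frame to current_row's frame.

    Rows look like [frame, iteration, octave, layer]. Values are rounded, not
    truncated, so each keyframe's own value is reproduced exactly.
    """
    start, end = int(previous_row[0]), int(current_row[0])
    span = end - start
    p_iter, c_iter = float(previous_row[1]), float(current_row[1])
    p_oct, c_oct = float(previous_row[2]), float(current_row[2])
    for i in range(span + 1):
        t = i / span if span else 0.0
        key_frames[start + i] = (round(p_iter + t * (c_iter - p_iter)),
                                 round(p_oct + t * (c_oct - p_oct)))


def expand_keyframes(csv_file, total_frames, default=(10, 4)):
    """Per-frame (iteration, octave) list indexed by 1-based frame number."""
    key_frames = [default] * (total_frames + 1)  # index 0 is unused
    reader = csv.reader(csv_file)
    next(reader, None)  # skip the header
    rows = [row for row in reader if row]
    if not rows:
        return key_frames
    # Hold the first keyframe's values back to frame 1 ...
    if int(rows[0][0]) != 1:
        rows.insert(0, ["1"] + rows[0][1:])
    # ... and the last keyframe's values forward to the final frame
    if int(rows[-1][0]) != total_frames:
        rows.append([str(total_frames)] + rows[-1][1:])
    # Each pair of consecutive rows is one keyframe section; a lone row
    # (only possible when total_frames == 1) is paired with itself
    for previous_row, current_row in list(zip(rows, rows[1:])) or [(rows[0], rows[0])]:
        interpolate(key_frames, previous_row, current_row)
    return key_frames


sample = io.StringIO(
    "frame,iteration,octave,layer\n"
    "33,15,5,Layer\n"
    "66,5,30,Layer\n"
    "99,30,10,Layer\n"
)
frames = expand_keyframes(sample, total_frames=120)
print(frames[1], frames[33], frames[49], frames[66], frames[99], frames[120])
```

Collecting the rows into a list first avoids having to rewind the file, at the cost of holding the (small) CSV in memory.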

As you can probably tell, I’m a Python hater 😀 One piece of advice I always give is: don’t start your programming career with Python. The reason is that Python is so different from C-like languages that fully adapting to it makes it hard to move to other languages. For example, I have friends who keep forgetting that a variable needs a type, and how type casting works, when they move from Python to other languages.

The other differences are the basics: indentation rather than {}, no need to declare a variable before using it, and no static typing. It literally dodges every single criterion I think a good programming language should meet. However, its “simplicity” and some well-supported scientific libraries have made it popular in the research world. Nevertheless, one thing I’ve learned is that if you are seriously planning to work with one language style, you’d better choose one you enjoy writing.

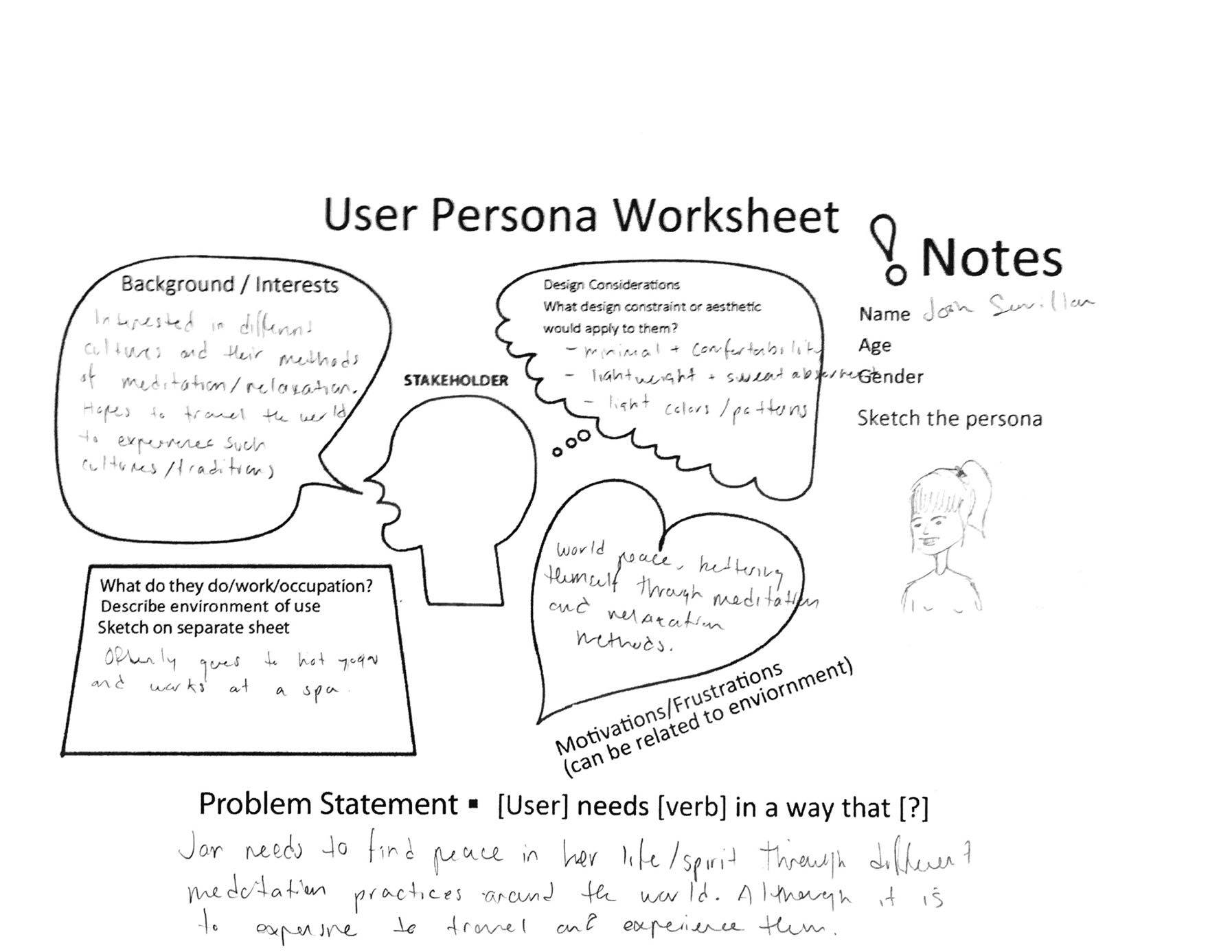

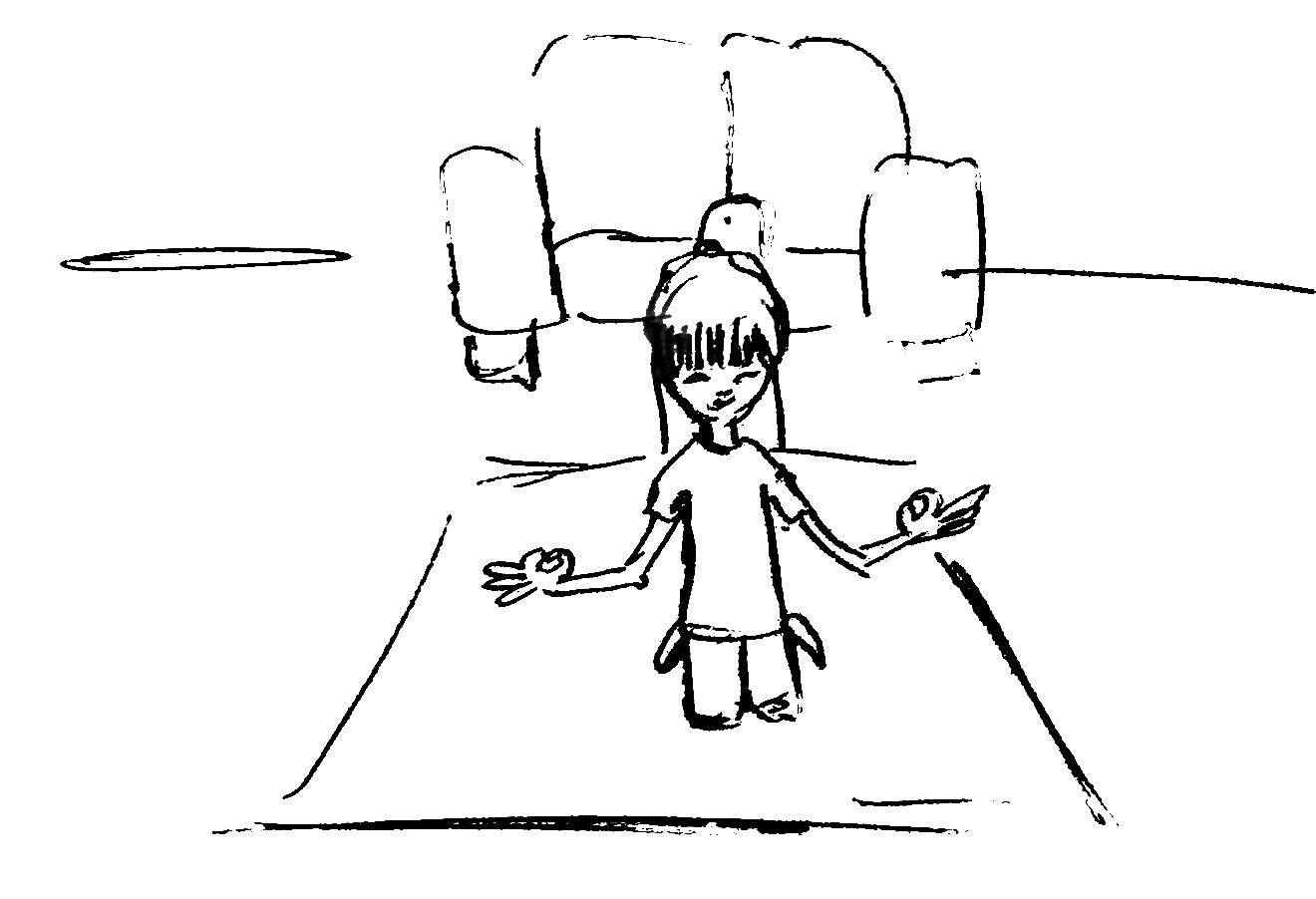

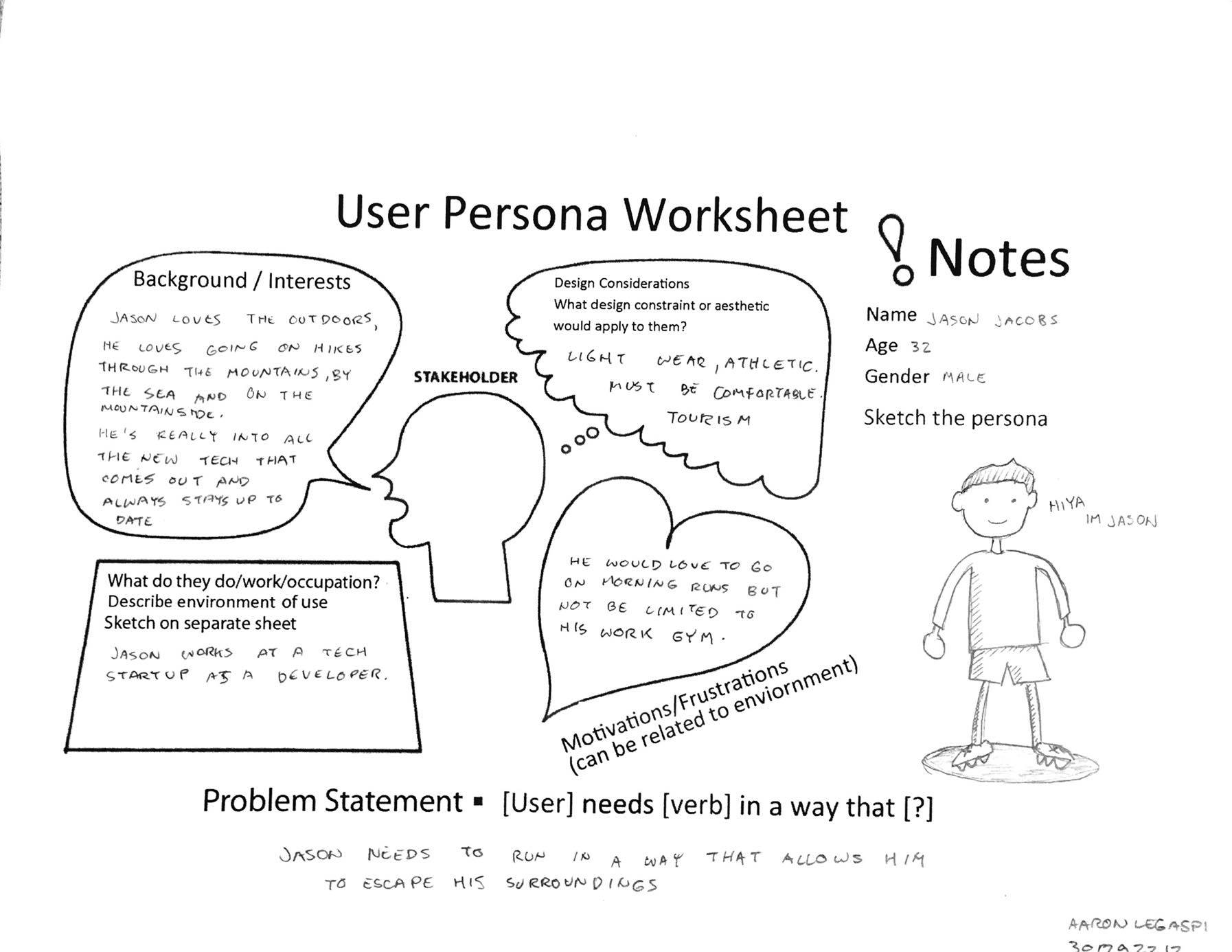

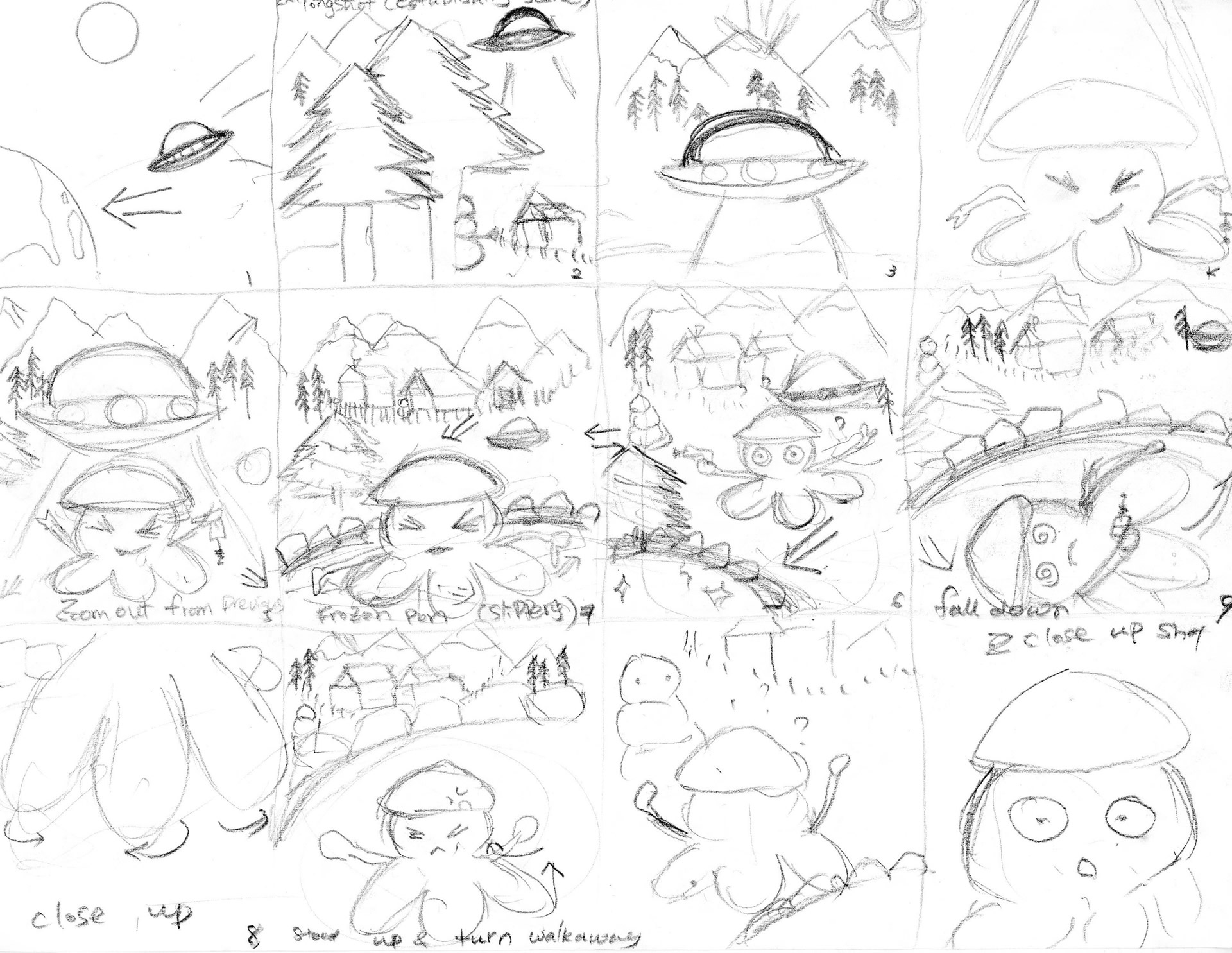

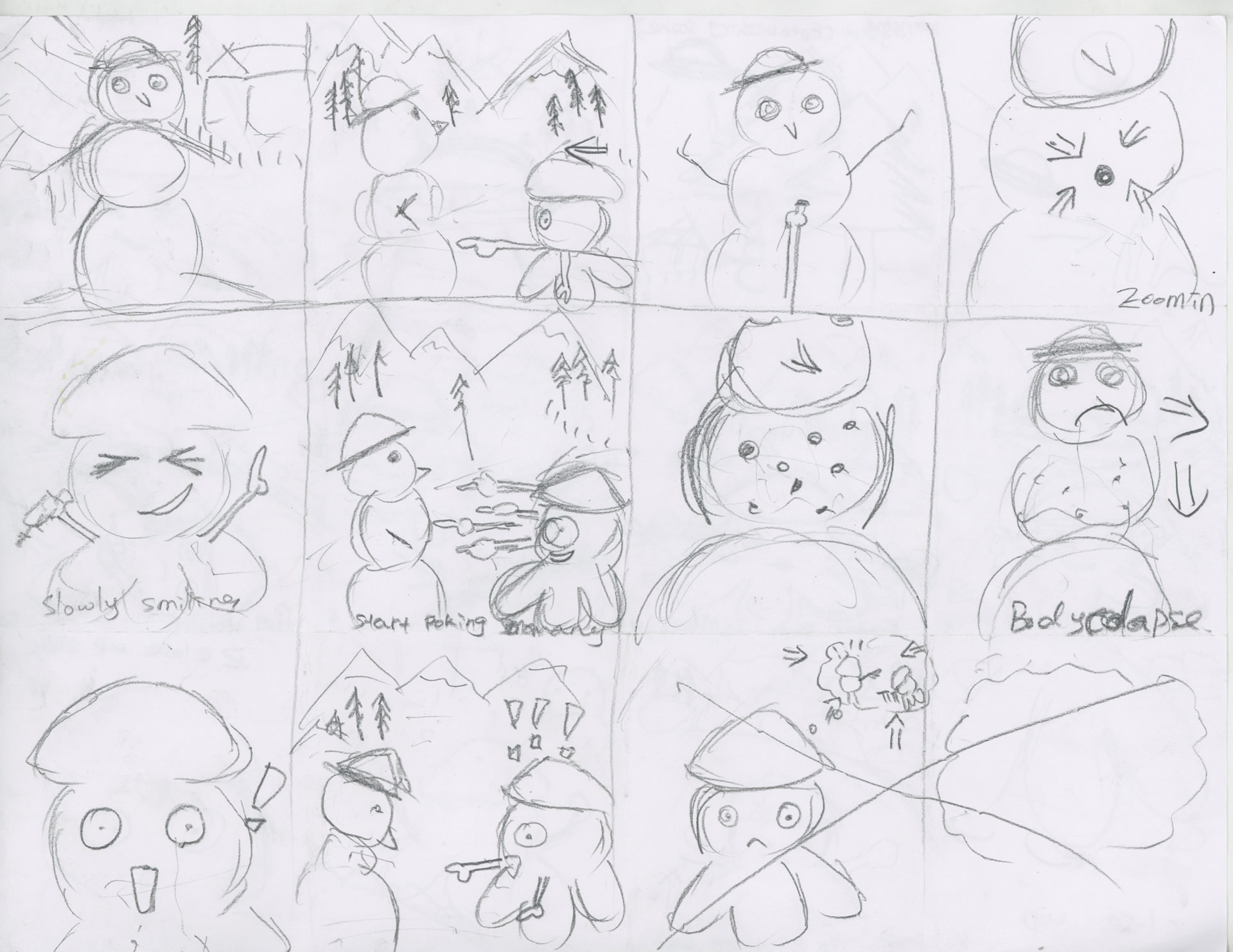

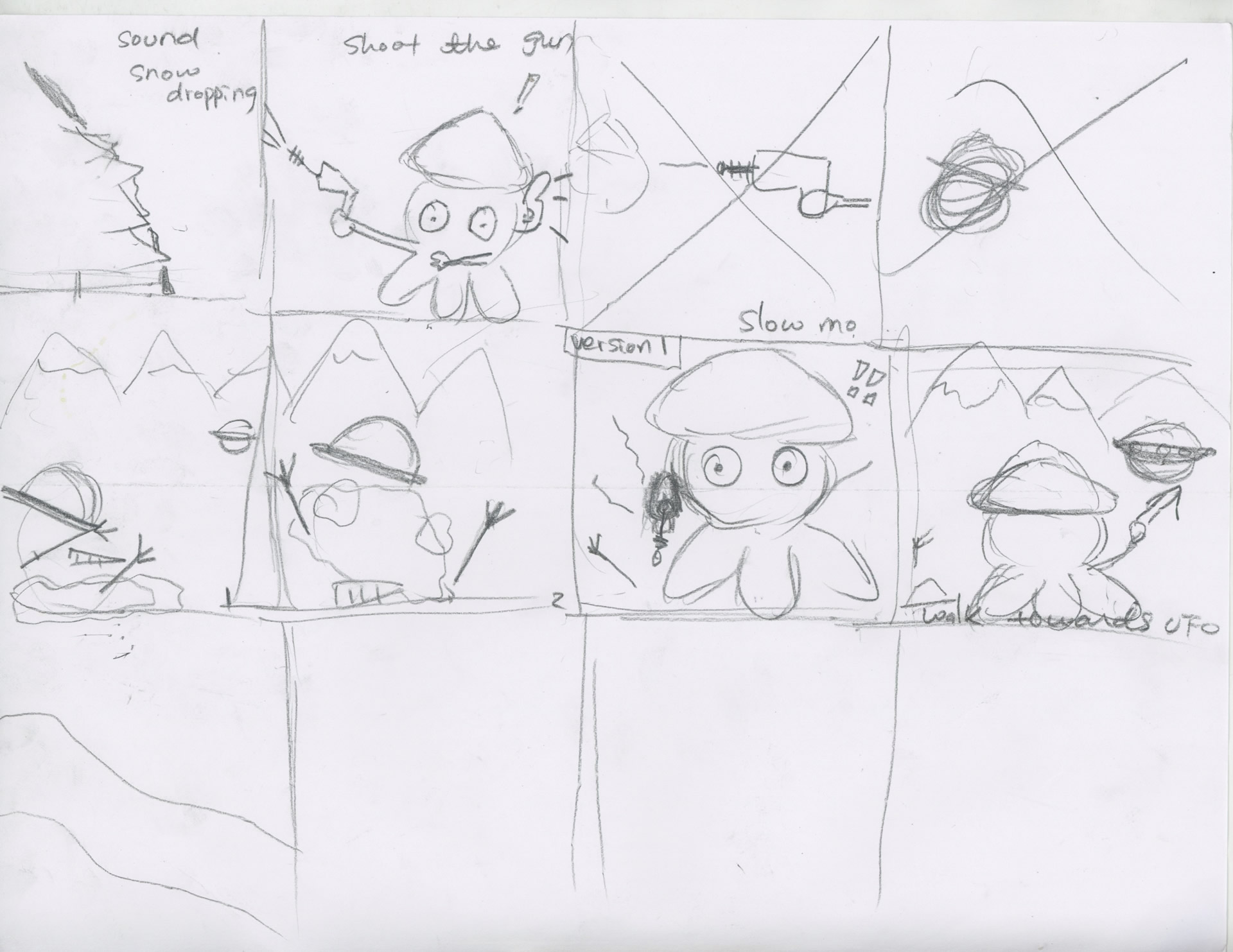

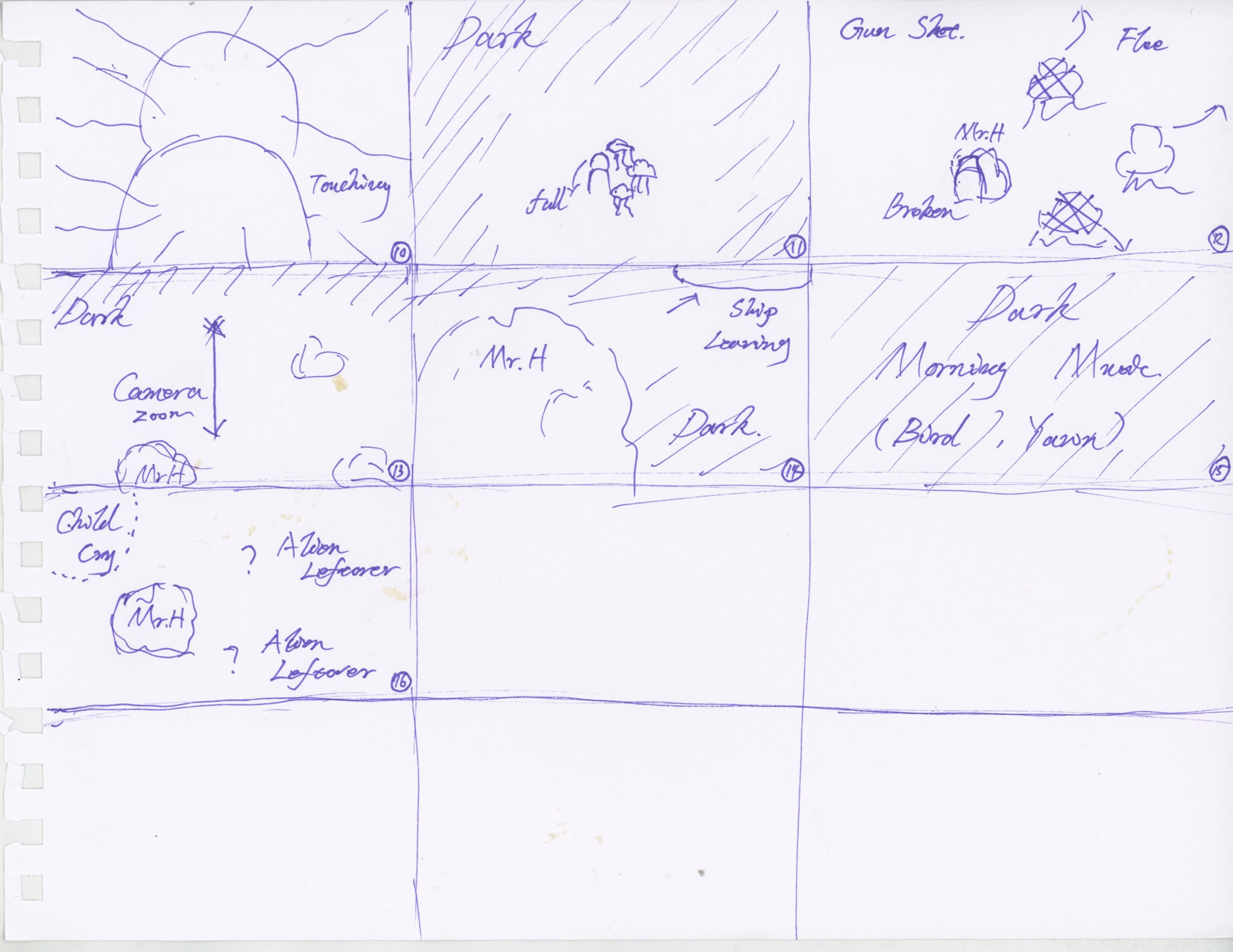

We quickly generated some sketches based on the topic we selected. Highlights include:

We are a team of four, Erik, Claire, Lucky, and Wei, with a balanced mix of sketching, programming, and broader design skills. At our initial meeting, we agreed that we may not aim to be the best, but we definitely want a polished animation at the end. We also agreed that our project should be easy to implement while still letting us explore different techniques to improve the final result.

We quickly decided that low-poly is the style we want to go with. Our rationale is that a realistic scene may look good but lacks variety: the same scene done by team A will look similar to one done by team B, since both aim to be as close to real life as possible, which can be dull for an everyday scenario. Meanwhile, low-poly offers us a completely different look while still letting us explore conventional techniques.

We brainstormed a few directions we might take. One central guideline was to avoid complicated scenarios so we could focus on storytelling and similar aspects. One strategy is to make the main character a creature that floats or moves mechanically like a machine, which simplifies the movement animation. Some of the ideas we generated are as follows:

The story starts with an overview of a lake scene. Two characters are introduced while the camera setup leads the viewer to think this is a battleground. At the climax, instead of fighting, the two characters actually sit down and start fishing. The intensity of the scene drops dramatically toward the end to surprise the audience.

The story starts with a fairly small setup within an ordinary room. The main character is sleeping when aliens come in through the window and kidnap the character. At last, the character is sent back and put to sleep again after the alien experiment is done.

An alien visits Earth and finds a snowman near a small remote town. The alien starts exploring and interacting with the snowman. Eventually, startled by falling snow, the alien accidentally shoots the snowman and then crushes it.

We liked the fishing idea because of the surprise it introduces. However, fishing requires rendering water and complicated movement, which could be challenging for us. We also liked the alien kidnap idea, mainly because the story happens within one small room and the alien would probably float rather than walk, which is easier for us to model. Eventually, we selected the snowman idea, since it keeps the advantages of the alien while the snowman is easier to handle. We want to start the project with a relatively simple setup and gradually challenge ourselves as we move on.

Steve reviewed our current development with a few samples and also ran a few of the tools we use to generate art and videos. In the end, a clearer picture emerged: in the future, our program should generate paintings in real time from captured video while responding to user emotion. A few issues were addressed:

Compared to optical flow and temporal coherence, I’m more familiar with how to make our current video-generating program respond to parameter changes. The task from now on is to add an API so the program can read parameters from a text file and produce output accordingly.
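One way to expose that API, assuming the script uses Python’s `argparse`, is a flag that takes the CSV path. With `argparse.FileType`, the script receives an already-open file, or `None` when the flag is omitted, which matches how `key_frames` is checked and used with `with` in the keyframe code. The flag names and the default values of 10 and 4 are hypothetical.

```python
import argparse


def build_parser():
    """Hypothetical command-line interface for the dreaming script."""
    parser = argparse.ArgumentParser(description="Apply DeepDream to video frames.")
    parser.add_argument("--iterations", type=int, default=10,
                        help="iteration count used where no keyframe applies")
    parser.add_argument("--octaves", type=int, default=4,
                        help="octave count used where no keyframe applies")
    # FileType opens the file for us; the value is stored as args.key_frames
    parser.add_argument("--key-frames", type=argparse.FileType("r"), default=None,
                        help="CSV file with frame,iteration,octave,layer rows")
    return parser
```

A run might then look like `python dream.py --iterations 20 --key-frames keys.csv`, where `dream.py` and `keys.csv` are placeholder names.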

Our current program generates frames from a video in a for-loop by calling a DeepDream routine:

```
DeepDream(Layer, Guide, Iteration, Octave);
```

The layer parameter controls which layer of the neural network is used to paint on the canvas, i.e., largely what the final pattern looks like. The guide controls which portion of the nodes within the layer is prioritized. Iteration controls how heavily the output is altered: the more iterations, the more the final result deviates from the original input. The octave controls the size of the style patch; a larger octave generates a larger patch.
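Once each frame can have its own parameters, the for-loop might look like the sketch below. It is a hedged illustration: `dream_frames` is a hypothetical name, and `deep_dream` stands in for the program’s `DeepDream(Layer, Guide, Iteration, Octave)` routine, assumed here to also take the frame as its first argument. Layer and guide stay fixed, while iteration and octave are looked up by 1-based frame number.

```python
def dream_frames(frames, key_frames, layer, guide, deep_dream):
    """Run a DeepDream-style routine over frames with per-frame iteration/octave.

    frames: sequence of images, where frames[0] is video frame 1
    key_frames: list indexed by 1-based frame number holding (iteration, octave)
    deep_dream: stand-in for the real routine (assumed signature)
    """
    dreamed = []
    for frame_number, frame in enumerate(frames, start=1):
        iteration, octave = key_frames[frame_number]
        # int() accepts both ints and the string values stored by interpolate()
        dreamed.append(deep_dream(frame, layer, guide, int(iteration), int(octave)))
    return dreamed
```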

Our program currently iterates through all frames with the same hardcoded set of parameters. The first step is to update iteration and octave based on keyframe interpolation. For example, if the user assigns iteration 10 at frame 1 and 20 at frame 5, the program must interpolate the frames in between; with linear interpolation, frame 3 gets iteration 15. The interpolation can be linear, cubic, etc., depending on user choice. One issue is that interpolation may produce fractional iterations or frames, which must be rounded. This may make the final frames jump from one to the next instead of changing smoothly.
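The worked example above, in code. The post doesn’t specify which cubic model it means, so the `"cubic"` option here uses smoothstep (3t² − 2t³), one simple ease-in/ease-out cubic, purely as an assumption. The loop prints the raw and rounded values for frames 1 to 5, which shows the rounding steps. Note that Python’s `round` rounds halves to the nearest even number, so 12.5 becomes 12 and 17.5 becomes 18.

```python
def interpolate_value(frame, start_frame, start_value, end_frame, end_value,
                      method="linear"):
    """Value of a parameter at `frame`, between two keyframes."""
    t = (frame - start_frame) / (end_frame - start_frame)
    if method == "cubic":
        # Smoothstep: eases in and out, with zero slope at both keyframes
        t = t * t * (3 - 2 * t)
    elif method != "linear":
        raise ValueError(f"unknown method: {method}")
    return start_value + t * (end_value - start_value)


# Iteration 10 at frame 1 and 20 at frame 5
for frame in range(1, 6):
    value = interpolate_value(frame, 1, 10, 5, 20)
    print(frame, value, round(value))
```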

The layer and guide should be updated dynamically in the same way. One key difference is that the generated results must be blended, since the change will be dramatic. Steve proposed a few simple alpha blending solutions, but I feel we can use more advanced blending based on total pixel difference, which I covered in my previous computer graphics studies. This part is more challenging but also more rewarding. If it can be solved properly, it could also help with the fractional frame issue in step one, and even resolve the flickering issue mentioned at the beginning.
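A minimal sketch of the simple alpha blending option, assuming frames are flat lists of pixel values of equal length (a real implementation would more likely use image arrays). During a layer or guide change, `frame_a` would be the frame dreamed with the old settings and `frame_b` the same frame dreamed with the new ones, with alpha rising evenly across the transition. `total_pixel_difference` is one possible reading of “total pixel difference” (the sum of absolute per-pixel differences), included only as a rough measure of how abrupt a change is; all three function names are hypothetical.

```python
def alpha_blend(frame_a, frame_b, alpha):
    """Per-pixel linear blend: (1 - alpha) * a + alpha * b."""
    if len(frame_a) != len(frame_b):
        raise ValueError("frames must have the same size")
    return [(1 - alpha) * a + alpha * b for a, b in zip(frame_a, frame_b)]


def crossfade_alphas(transition_frames):
    """Alpha for each frame of a transition, rising evenly from 0 to 1."""
    if transition_frames < 2:
        return [1.0] * transition_frames
    return [i / (transition_frames - 1) for i in range(transition_frames)]


def total_pixel_difference(frame_a, frame_b):
    """Sum of absolute per-pixel differences between two frames."""
    return sum(abs(a - b) for a, b in zip(frame_a, frame_b))
```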

Overall, I’m quite happy that we had a very concrete discussion with Steve today and sorted out the upcoming tasks. Steve not only conveyed his ideas but also talked through some very detailed implementation points. In the future, I think the high-level requirements alone would be enough, which would save some effort. Plus, the alpha blending proposal is a real relief for me, since it means the expectations for our next implementation are not that high. Multimedia and image processing really laid a great foundation for the language we use in computer graphics today.