The painting project has made some progress recently. It now supports linear keyframe interpolation on iteration, octave, layer, and model, so a video clip can transition fairly smoothly from one style to another. However, temporal coherence is still an unsolved issue. The entire creative team met recently, which led to a deeper solution that could potentially help with all of the existing issues.

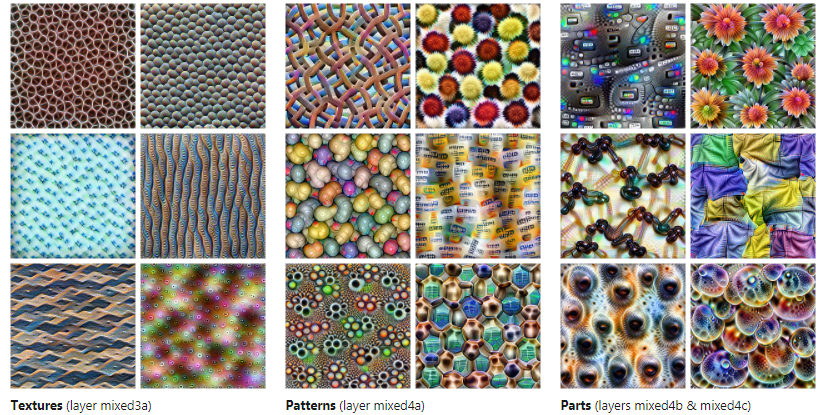

Deep Dream Basics

The essential idea of how deep dream applies a style to an image is quite simple. The algorithm runs over the image array and generates an RGB delta for each pixel based on its original value. The result is then enhanced recursively by applying the same technique again and again. Essentially, the output equals the original pixel value plus the deep dream delta.
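The update described above can be sketched in a few lines. This is only an illustration of the "output = original + delta, repeated" loop: `toy_delta` is a hypothetical stand-in for the delta that the real network would compute, and `dream` is a name chosen here, not part of the project code.

```python
import numpy as np

def toy_delta(image):
    """Hypothetical stand-in for the deep dream delta.

    The real delta comes from the network; here we only push every
    value slightly away from the image mean so the loop has something to add.
    """
    return 0.1 * (image - image.mean())

def dream(image, delta_fn, iterations):
    """Repeatedly add the delta computed from the current result."""
    out = np.asarray(image, dtype=np.float64)
    for _ in range(iterations):
        # output = current pixel values + delta derived from those values
        out = out + delta_fn(out)
    return out
```

Each pass feeds the previous output back in, which is why the effect grows stronger with the iteration count.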

Image Blending Issue

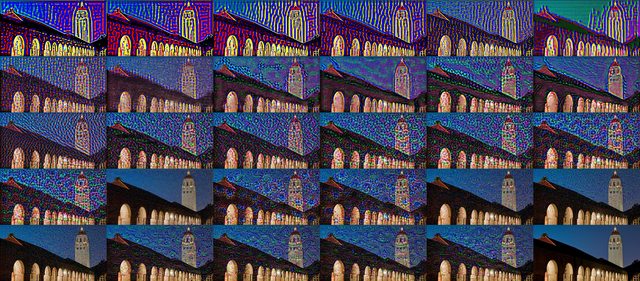

Based on the above, instead of alpha blending two generated images, which can lead to significant “ghost” artifacts, we can simply dream on different styles with varying numbers of iterations. For example, instead of alpha blending two images, one from style A at 70% alpha with 10 iterations and one from style B at 30% alpha with 10 iterations, we can apply style A for 7 iterations and then style B for 3 iterations, recursively on the same image.
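A minimal sketch of this idea, assuming each style is available as a function that returns a delta for the current image. The names `dream_in_sequence`, `plan`, and `delta_fns` are hypothetical and only serve the illustration.

```python
def dream_in_sequence(image, plan, delta_fns):
    """Apply several styles one after another on the same image.

    plan:      list of (style_name, iterations), e.g. [("A", 7), ("B", 3)]
    delta_fns: maps a style name to a function returning that style's delta
               (hypothetical stand-ins for the real per-style dream step)
    """
    out = image
    for style, iterations in plan:
        delta_fn = delta_fns[style]
        for _ in range(iterations):
            # Each step builds on the result of the previous one, so no
            # second image is ever blended in and nothing can "ghost".
            out = out + delta_fn(out)
    return out
```

With this shape, the 70% / 30% example becomes `dream_in_sequence(image, [("A", 7), ("B", 3)], delta_fns)`.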

The only open question with this new method is the order of the iterations. They can be interleaved (ABABABAAAA), or grouped with B first (BBBAAAAAAA) or A first (AAAAAAABBB). The results of these orderings will differ, so they will need to be evaluated once the code is done.
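To compare the orderings, it may help to generate them from the same iteration counts. The sketch below is one possible way to do that; `round_robin` and `blocked` are hypothetical helper names, and simple round-robin alternation is only one of many ways to mix two styles.

```python
def round_robin(counts):
    """Alternate between styles until each has used up its iterations."""
    remaining = dict(counts)
    order = []
    while any(remaining.values()):
        for name in remaining:
            if remaining[name] > 0:
                order.append(name)
                remaining[name] -= 1
    return order

def blocked(counts, style_order):
    """Run all iterations of one style before moving on to the next."""
    return [name for name in style_order for _ in range(counts[name])]

counts = {"A": 7, "B": 3}
mixed = round_robin(counts)            # A and B alternate, then the rest of A
b_first = blocked(counts, ["B", "A"])  # all B iterations, then all A
a_first = blocked(counts, ["A", "B"])  # all A iterations, then all B
```

Each returned list can be fed one step at a time into the dream loop, so the three variants can be rendered from the same source frame and compared side by side.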

Temporal Coherence

This issue has come up again and again. Using optical flow to direct the dreaming has been regarded as one of the best solutions, but the detailed implementation has been unknown. Now we can finally apply the motion vectors from the optical flow to the deep dream delta to redirect the result.
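One possible reading of "applying the flow vectors to the delta" is to move the previous frame's delta along the motion field before adding it to the next frame. The sketch below assumes that reading; it is not a confirmed implementation. It also assumes the flow is stored as an `(H, W, 2)` array of `(dx, dy)` pixel offsets sampled at the destination pixel, and it uses a plain nearest-neighbour lookup instead of proper interpolation. `warp_delta` is a hypothetical name.

```python
import numpy as np

def warp_delta(delta, flow):
    """Move a dream delta along an optical flow field.

    delta: (H, W, C) delta produced for the previous frame
    flow:  (H, W, 2) assumed layout, (dx, dy) motion in pixels from the
           previous frame to the current one
    """
    h, w = delta.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    # Backward warp: for every target pixel, look up where it came from.
    # Sources outside the image are clamped to the nearest border pixel.
    src_x = np.clip(np.rint(xs - flow[..., 0]).astype(int), 0, w - 1)
    src_y = np.clip(np.rint(ys - flow[..., 1]).astype(int), 0, h - 1)
    return delta[src_y, src_x]
```

Under these assumptions, the next frame could be seeded with `next_frame + warp_delta(previous_delta, flow)` before dreaming continues, so the texture follows the moving object.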

One concern is that we still want the dream to progress over time. For example, if a sun moves from left to right, the expected result is, say, a flame texture that gradually evolves as the sun moves, rather than a fixed texture at the location where the sun is. An evaluation must therefore be done to test whether a slight difference in the source image makes a huge change in the final dreamed result. Only if it does not might we make further use of the optical flow method mentioned above.
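A rough way to run this evaluation is to dream a frame and a slightly changed copy of it, then compare how far apart the outputs are relative to the inputs. The sketch below assumes mean absolute pixel difference as the measure, which is a choice made here for illustration; `sensitivity` and `dream_fn` are hypothetical names.

```python
import numpy as np

def sensitivity(image, perturbed, dream_fn):
    """Ratio of output difference to input difference.

    dream_fn is a stand-in for the full dream pipeline. A ratio near 1 means
    small input changes stay small; a large ratio means they are amplified.
    """
    a = np.asarray(image, dtype=np.float64)
    b = np.asarray(perturbed, dtype=np.float64)
    input_diff = np.abs(a - b).mean()
    if input_diff == 0:
        raise ValueError("the two inputs are identical")
    output_diff = np.abs(dream_fn(a) - dream_fn(b)).mean()
    return output_diff / input_diff
```

Two consecutive video frames could serve as `image` and `perturbed`, which keeps the test close to the real use case.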

AI Avatar Meeting

I also attended another meeting about an AI avatar program, an immersive talk bot. A few challenges were raised there, including:

- Implementing more XML commands to adjust the lighting of the talking environment, avatar body movement, etc.

- Building a queue system to handle multiple gestures when they overlap

- Researching potential ways to move the project to Unity

In the future, I may also help the team with some programming on the above issues.