The term is ending very soon. I’ll be continuing on the project part-time until early next year, and there are a few other directions we could potentially take.

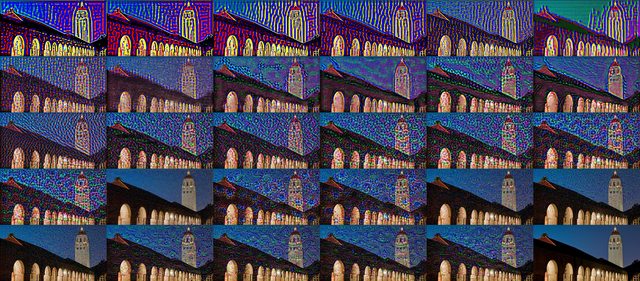

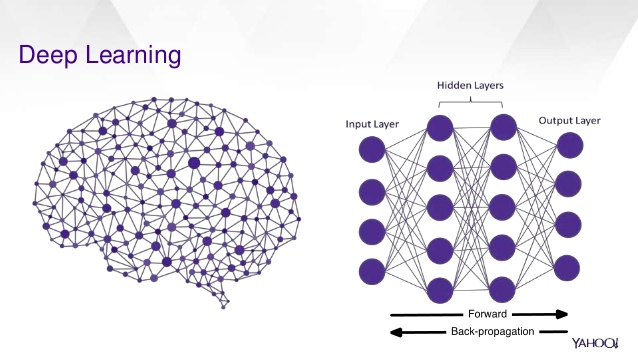

Iteration Blending

The blending based on iteration is fully functional. If the configuration requires 10 iterations in total to dream on one frame, the blending algorithm dreams 1 time with style A, then 9 times with style B. On the next frame, it applies 2 iterations of style A and 8 iterations of style B. This pattern continues until the style has fully moved from B to A. Right now the idea is implemented with a recursive algorithm. Performance is not that good: a 10-iteration frame (2 passes) takes roughly 10 seconds to finish. The result is much improved with regard to the ghosting effect of the alpha blending technique.
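The per-frame split described above can be sketched roughly as follows. This is only an illustration of the schedule, not the recursive implementation used in the project; the function names are hypothetical, and I am assuming the share of style A grows by exactly one iteration per frame.

```python
def iteration_split(frame_index, total_iterations):
    """Return (style_a_iterations, style_b_iterations) for one frame.

    Assumption: style A starts at 1 iteration on frame 0 and gains one
    iteration per frame, capped at total_iterations.
    """
    style_a = min(frame_index + 1, total_iterations)
    style_b = total_iterations - style_a
    return style_a, style_b


def blend_schedule(total_iterations):
    """List the split for every frame until style B is fully replaced."""
    return [iteration_split(i, total_iterations) for i in range(total_iterations)]


schedule = blend_schedule(10)
print(schedule[0])   # (1, 9)
print(schedule[1])   # (2, 8)
print(schedule[-1])  # (10, 0)
```

Every entry sums to the configured total, so the cost per frame stays constant while the balance shifts between the two styles.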

Refactoring & Python

Some heavy refactoring has been taking place recently, and it led to a few interesting observations. Python does offer fairly strong support for scientific research, with easy APIs for image processing, video analysis, and high-dimensional arrays. However, my experience with it so far is still bad. The programming style feels more like a quick mockup to try out an idea than something that takes the integrity of the code seriously.

One example is the epic indentation logic that decides where a code block begins and ends. In a giant nested control statement (if, for, etc.), it is quite likely that the ending becomes chaotic, with statements floating around and no clue as to which block they belong to. To me, this is purely a design flaw: I gain nothing from it, and it only introduces trouble for later maintenance. My conclusion rests on the view that a good design should produce robust code logic not only for now but also for future reference, while simplified syntax is ONLY a bonus once that foundation has been laid properly.

    for x in range(0, n):
        # Do some stuff here...
        for y in range(0, n):
            # Do other stuff here...
            if foo is None:
                # Keep doing stuff...
                pass
        # Indentation here will be confusing who it belongs to.

Variable Parameter Passing

In Python, *args and **kwargs can be used in a function definition to accept an indefinite number of arguments. Similar features are common in other programming languages. They help in scenarios where the input of the function cannot be predetermined but still follows a pattern. For example, consider a function that converts user-supplied strings to book-title style, with each word starting with an uppercase character. To simplify the call, the user can pass an arbitrary number of separate strings as parameters instead of wrapping them in a collection object such as an array or list. Specifically, this mechanism is used in our dreaming code:

    dreamed_frame = deep_dream(
        net,
        input_frame,
        image_type=image_type,
        verbose=verbose,
        iter_n=int(key_frames[i].iteration.first),
        octave_n=int(key_frames[i].octave.first),
        octave_scale=octave_scale,
        step_size=stepsize,
        jitter=jitter,
        guide_image=guide_image,
        end=end_param,
    )

    def deep_dream(
        net,
        base_img,
        image_type,
        iter_n=10,
        octave_n=4,
        octave_scale=1.4,
        end='inception_4c/output',
        clip=True,
        verbose=1,
        guide_image=None,
        **step_params
    ):
        # Do stuff...
        for i in xrange(iter_n):
            make_step(net, end=end, clip=clip, guide_features=guide_features,
                      **step_params)
        # Do stuff...

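In the code above, the “deep_dream” function is called and in turn calls the “make_step” procedure internally. The “**step_params” entry at the end of the “deep_dream” signature collects every keyword argument that is not named explicitly in that signature, and the whole set is passed straight on to “make_step” inside the loop. In the call, those extra arguments are “step_size” and “jitter”. The “end=end_param” argument, by contrast, matches the named “end” parameter and is forwarded to “make_step” explicitly.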
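To make the forwarding concrete, here is a stripped-down sketch. Both functions are hypothetical stand-ins that only share names with the real ones; their bodies and default values are assumptions for illustration, not the project's code.

```python
def make_step(net, step_size=1.5, jitter=32, end="inception_4c/output"):
    """Hypothetical stand-in: just reports the arguments it received."""
    return {"step_size": step_size, "jitter": jitter, "end": end}


def deep_dream(net, base_img, iter_n=10, end="inception_4c/output", **step_params):
    """Hypothetical stand-in: forwards unnamed keyword arguments to make_step."""
    # step_params is a plain dict holding every keyword argument
    # that is not named in the signature above.
    received = None
    for _ in range(iter_n):
        received = make_step(net, end=end, **step_params)
    return step_params, received


captured, received = deep_dream(
    None, None, iter_n=1, end="layer_x", step_size=2.0, jitter=16
)
print(captured)  # only step_size and jitter were collected
print(received)  # make_step saw them as ordinary keyword arguments
```

Note that `end` never shows up in `step_params`, because it matches a named parameter of the outer function.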

One good thing about this is that the calling function can separate its immediate parameters from the ones that will be passed on later to nested functions. For example, in a real-life cookie-making scenario, Annie is dedicated to making cookies while Joe is dedicated to cleaning plates. If I want a plate of cookies, the traditional way is to ask Annie:

“Hi, Annie, would you make a plate of cookies for me with powder A, salt B, blueberry C, plate D, kitchen cleaner F, and paper towel E?”

Annie accepts the task, then asks:

“Hi, Joe, would you prepare me a container with plate D, kitchen cleaner F, and paper towel E?”

Later on, Annie will be able to make the cookies with the plate Joe prepared. Using variable parameters, I would instead ask:

“Hi, Annie, would you make a plate of cookies for me with powder A, salt B, blueberry C, and the stuff for Joe?”

The stuff for Joe contains “plate D, kitchen cleaner F, and paper towel E”, which Annie does not need to know about because she is dedicated to cookie making. Annie can then get the plate by asking:

“Hi, Joe, would you prepare me a container with the stuff I got from Wei?”

Essentially, the same scenario can happen in the programming world. Variable parameters allow a programmer to pass parameters along without knowing their actual content, which is encapsulated inside the *args tuple or the **kwargs dictionary. This reduces the risk of mistakenly using those parameters, since validation is done in the nested function, Joe.

However, there are a few drawbacks to this idea too. First, the passed parameters are hidden from the programmer. If something goes wrong and the programmer is forced to dig into the nested function, Joe, debugging is difficult because it is not obvious which parameters were passed; they are all bundled into that one collection. Second, the forwarding does not nest cleanly. Say I pass the ingredients, the plate, and the water switch to Annie, and Annie passes the plate and the water switch to Joe. Joe cannot pass the same bundle on to plumber Smith; he has to trim off the plate and pass only the water switch. Although the trimming can be arranged in advance by nesting one bundle inside another from the start, it is just too much trouble.
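Both drawbacks can be seen in a small sketch built on the analogy. The three functions are hypothetical and exist only for illustration. Here Joe trims the bundle by naming `plate` in his own signature, which is one way of doing it, and a misspelled keyword is not noticed until it reaches Smith.

```python
def smith(water_switch):
    """Plumber Smith only understands the water switch."""
    return "Smith handles {}".format(water_switch)


def joe(plate, **for_smith):
    """Joe takes the plate himself and forwards the rest to Smith."""
    return "Joe cleans {}; {}".format(plate, smith(**for_smith))


def annie(ingredient, **for_joe):
    """Annie uses the ingredient and hands everything else to Joe unseen."""
    return "Annie bakes with {}; {}".format(ingredient, joe(**for_joe))


print(annie("flour", plate="plate D", water_switch="on"))

# A misspelled keyword travels through Annie and Joe untouched
# and only fails once it reaches Smith.
try:
    annie("flour", plate="plate D", water_swtich="on")
except TypeError as error:
    print("failed deep in the chain:", error)
```

The error is raised two calls away from where the mistake was made, which is the debugging problem described above.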

In conclusion, variable parameters most likely have no special application in Python beyond the typical scenario that other programming languages also support, say, handling an undetermined number of user inputs. From my past experience, that situation is not very common. The particular case in our deep dreaming algorithm does not seem like a good fit for variable parameters, since the input is fixed and the drawbacks of such an implementation are more significant.
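For comparison, the typical scenario, such as the book-title example mentioned earlier, might look like the sketch below. The function name is made up for illustration.

```python
def book_title(*words):
    """Join any number of words into a title with capitalized first letters."""
    return " ".join(word.capitalize() for word in words)


print(book_title("the", "old", "man", "and", "the", "sea"))  # The Old Man And The Sea

# A caller who already holds a list can unpack it at the call site.
parts = ["deep", "dream"]
print(book_title(*parts))  # Deep Dream
```

Here the number of inputs really is unknown in advance, which is where *args fits naturally.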

Named Tuple

In mathematics a tuple is a finite ordered list (sequence) of elements. An n-tuple is a sequence (or ordered list) of n elements, where n is a non-negative integer. There is only one 0-tuple, an empty sequence. An n-tuple is defined inductively using the construction of an ordered pair. — Wikipedia

One example is Point(x, y), a tuple consisting of an x value and a y value. A Python example follows:

    # Declare and instantiate tuples.
    tup1 = ('physics', 'chemistry', 1997, 2000)
    tup2 = (1, 2, 3, 4, 5)
    tup3 = "a", "b", "c", "d"

    # Access tuple elements by index and by slice.
    print("tup1[0]: {}".format(tup1[0]))
    print("tup2[1:5]: {}".format(tup2[1:5]))

This feature can be very helpful for lightweight objects such as a point: instead of creating your own class, just use a tuple. However, one problem is that the naming is less convenient, since p[0] has little obvious connection with the x coordinate of a point. The solution is the named tuple:

    from collections import namedtuple
    from math import sqrt

    Point = namedtuple('Point', 'x y')
    pt1 = Point(1.0, 5.0)
    pt2 = Point(2.5, 1.5)

    # Compute the distance between the two points.
    line_length = sqrt((pt1.x - pt2.x) ** 2 + (pt1.y - pt2.y) ** 2)

A named tuple is almost always a better choice than a nameless one, unless the context makes naming the fields impossible. To take advantage of this, all the keyframes are now built as named tuples, so frame[i].iteration.first and frame[i].iteration.second are the two weights for the iteration blending mentioned at the very beginning.
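A keyframe built this way might look roughly like the sketch below. The type names `Pair` and `KeyFrame` and the sample values are assumptions for illustration; only the `iteration`, `octave`, `first`, and `second` field names come from the code shown earlier.

```python
from collections import namedtuple

# Hypothetical layout: each blended setting holds one value per style.
Pair = namedtuple("Pair", "first second")
KeyFrame = namedtuple("KeyFrame", "iteration octave")

key_frames = [
    KeyFrame(iteration=Pair(1, 9), octave=Pair(4, 4)),
    KeyFrame(iteration=Pair(2, 8), octave=Pair(4, 4)),
]

frame = key_frames[0]
print(frame.iteration.first, frame.iteration.second)

# Named tuples are still tuples, so indexing and unpacking keep working.
first, second = frame.iteration
assert frame.iteration[0] == first
```

The field names document themselves at the call site, while the objects stay as cheap and immutable as plain tuples.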

In contrast, C++ seems to have a similar idea for encapsulating values in a lightweight object (std::pair with its first and second members, and std::tuple), while Java, as expected, does not. It is more of a cultural thing: Java tends to favor robustness and rarely anticipates a specific user scenario. So Java users are expected to implement their own lightweight object for a tuple, or at least for a pair (xxx.first and xxx.second).