Future System

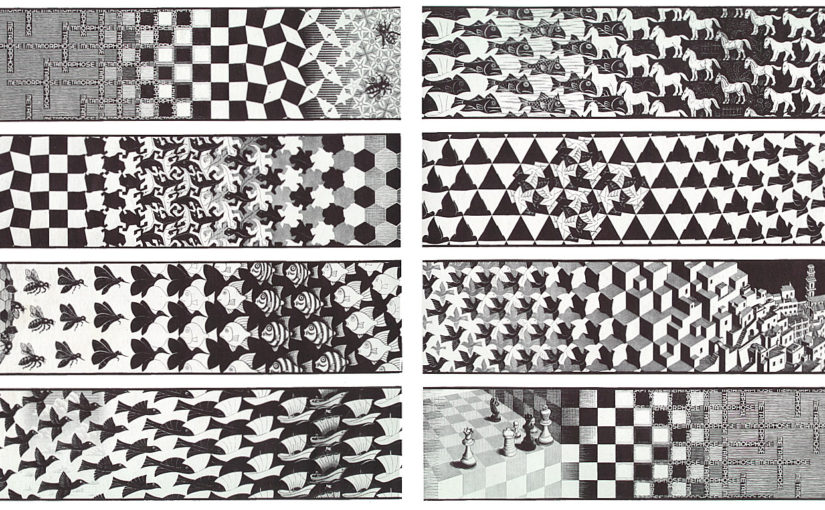

Steve revisited our current development with a few samples and ran a few of the tools we use to generate art and videos. A clearer picture emerged: in the future, our program should generate paintings in real time from captured video while responding to the user’s emotion. A few issues were addressed:

- Our current advantage in this field is art generated from parameters, which means the video results can be altered based on the input. This is not implemented yet, but it is possible.

- For a real-time application, performance is a key issue. Our current videos are not generated in real time, but we do reduce the cost by generating one result every three frames. Users told us that art displayed at this rate is easier to take in than one result per frame, because they have more time to experience the work.

- Our current results lack temporal coherence. Users see flickering and discontinuous textures on moving objects during the video. This issue might be resolved by basing the generation on optical flow. An interesting video can be found here:

Work to Do

Compared with optical flow and temporal coherence, I’m more familiar with how to make our current video-generating program respond to parameter changes. The task from now on is to add an API so that the program can read parameters from a text file and output results accordingly.
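As a starting point, here is a minimal sketch of what reading parameters from a text file might look like. The file format is purely an assumption made for illustration (one keyframe per line as `frame layer guide iteration octave`, with `#` starting a comment); the names `Keyframe` and `parse_keyframes` are hypothetical and not part of our current program.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Keyframe:
    """One line of the (assumed) parameter file."""
    frame: int
    layer: str
    guide: str
    iteration: int
    octave: int


def parse_keyframes(text: str) -> List[Keyframe]:
    """Parse keyframes from text, one per line, sorted by frame number.

    Assumed line format: frame layer guide iteration octave
    Blank lines and anything after '#' are ignored.
    """
    keyframes = []
    for line_no, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        fields = line.split()
        if len(fields) != 5:
            raise ValueError(
                f"line {line_no}: expected 5 fields, got {len(fields)}"
            )
        frame, layer, guide, iteration, octave = fields
        keyframes.append(
            Keyframe(int(frame), layer, guide, int(iteration), int(octave))
        )
    keyframes.sort(key=lambda k: k.frame)
    return keyframes
```

In practice the text would come from something like `open(path).read()`. Keeping the parser separate from file access makes it easy to try out with an inline string first.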

Our current program generates frames from a video in a for-loop by calling a DeepDream routine:

DeepDream(Layer, Guide, Iteration, Octave);

The layer parameter controls which layer of the neural network is used to paint on the canvas, i.e. most likely what the final pattern looks like. The guide controls which portion of the nodes within the layer is prioritized. Iteration controls how heavily the output is altered: the more iterations, the further the final result deviates from the original input. The octave controls the size of the style patch; a larger octave generates a larger patch.
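The per-frame loop, combined with the one-result-every-three-frames schedule mentioned earlier, could be sketched roughly as below. This is not our actual code: `render_frames` is a hypothetical name, `dream` stands in for a call to the DeepDream routine with its parameters already bound, and holding the last result for the skipped frames is my assumption about how the schedule works.

```python
from typing import Callable, List, TypeVar

F = TypeVar("F")  # whatever type a frame is (image array, file name, ...)


def render_frames(
    frames: List[F], dream: Callable[[F], F], stride: int = 3
) -> List[F]:
    """Run `dream` on every `stride`-th frame and hold the result in between."""
    if stride < 1:
        raise ValueError("stride must be at least 1")
    results = []
    latest = None
    for index, frame in enumerate(frames):
        if index % stride == 0:
            # Only these frames pay the cost of a DeepDream call.
            latest = dream(frame)
        # Skipped frames reuse the most recent dreamed result.
        results.append(latest)
    return results
```

With `stride=3` the expensive call runs on frames 0, 3, 6 and so on, which is where the performance saving comes from.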

First Step

Our program currently iterates through all frames with the same hardcoded set of parameters. The first step is to update iteration and octave based on keyframe interpolation. For example, if users assign iteration 10 at frame 1 and 20 at frame 5, the program must interpolate the frames in between; with linear interpolation, iteration at frame 3 will be 15. The interpolation can be linear, cubic, etc., depending on the user’s choice. One issue is that interpolation may produce a fractional iteration or frame, which must be rounded. This may cause the final frames to jump from one to the next and lose smoothness.
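To make the example above concrete, here is a small sketch of linear keyframe interpolation with rounding. The function names are hypothetical, keyframes are assumed to be `(frame, value)` pairs sorted by frame, and frames outside the keyframe range are assumed to hold the nearest keyframe value. Rounding half up is only one possible choice.

```python
import math
from bisect import bisect_right
from typing import List, Tuple


def interpolate_linear(keys: List[Tuple[int, float]], frame: int) -> float:
    """Linearly interpolate a value at `frame` from sorted (frame, value) keys."""
    frames = [f for f, _ in keys]
    if frame <= frames[0]:
        return keys[0][1]
    if frame >= frames[-1]:
        return keys[-1][1]
    i = bisect_right(frames, frame)  # first keyframe strictly after `frame`
    f0, v0 = keys[i - 1]
    f1, v1 = keys[i]
    t = (frame - f0) / (f1 - f0)
    return v0 + t * (v1 - v0)


def iteration_at(keys: List[Tuple[int, float]], frame: int) -> int:
    """Interpolated iteration count, rounded half up to a whole number."""
    return max(1, math.floor(interpolate_linear(keys, frame) + 0.5))


keys = [(1, 10), (5, 20)]
print([iteration_at(keys, f) for f in range(1, 6)])  # [10, 13, 15, 18, 20]
```

The printed sequence shows the rounding issue directly: the steps between frames are 3, 2, 3, 2 rather than a constant 2.5, which is the kind of unevenness that may show up as a jump.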

Second Step

The layer and guide should be updated dynamically in a similar way. One key difference is that the generated results must be blended, since the change will be dramatic. Steve proposed a few simple alpha blending solutions, but I feel we can use more advanced blending based on total pixel difference, which was covered in my previous computer graphics studies. This part is more challenging and also more rewarding. If it can be solved properly, it can also help with the fractional-frame issue in step one, and may even resolve the flickering issue mentioned at the beginning.
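For reference, the simple alpha blending option might look like the sketch below, together with a helper that measures total pixel difference. Frames are represented as flat lists of pixel values purely for illustration, and all function names are hypothetical. How the difference measure should drive the blend is still an open design question, so the sketch only computes it.

```python
from typing import List, Sequence

Frame = Sequence[float]  # flattened pixel values, assumed to be in [0, 1]


def alpha_blend(old: Frame, new: Frame, alpha: float) -> List[float]:
    """Per-pixel cross-fade: alpha=0 gives `old`, alpha=1 gives `new`."""
    if len(old) != len(new):
        raise ValueError("frames must have the same number of pixels")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(old, new)]


def total_pixel_difference(a: Frame, b: Frame) -> float:
    """Sum of absolute per-pixel differences between two frames."""
    if len(a) != len(b):
        raise ValueError("frames must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b))


def crossfade_alphas(steps: int) -> List[float]:
    """Evenly spaced alphas for `steps` in-between frames of a transition."""
    return [(i + 1) / (steps + 1) for i in range(steps)]
```

One possible use: when the layer or guide changes at a keyframe, render the frame with both the old and the new settings, then spread the transition over a few frames using `crossfade_alphas`. A larger `total_pixel_difference` between the two renders could suggest a longer transition.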

Overall, I’m quite happy to have had a very concrete discussion with Steve today and to have sorted out the upcoming tasks. Steve not only conveyed his ideas but also talked through some very detailed implementation. I think in the future only the high-level requirements are necessary, which would save some effort. Plus, the alpha blending is really a relief for me, since the expectation for our next implementation is not that high. Multimedia and image processing really laid a great foundation for the language we use in computer graphics today.